To recap, we’re building a bot that given a list of YouTube channels, it’ll tweet out snippets of a newly posted video. Below is the list of planned activities:

Get list of my subscriptions from YouTube.Mark channels as music or blog and whether they’re Kenyan.- For each channel get a list of recent videos and store that in a database.

- Take the last video link and download the video.

- Clip the video to 40s.

- Tweet that video (title, link to YouTube)

- Save link to tweet, video in database

- Check every 30 minutes if there’s a new video, if yes start from 4.

In the previous post we managed to get the data from YouTube and save it into a csv file. I’ve subscribed to over 900 channels; a rather ridiculous number of channels I’ve been told.

Next, I went through the boring and tedious process (step 2 in our 8 point plan) of going through each of them and marking them as either Kenyan or not: if Kenyan, were they music or uncategorised.

Today we are going to move our marked data into a database. From here on out we’ll be using the database whenever we can, unless it’s easier to do otherwise. If you’d like to follow along with the code, you can find them in the repo: https://github.com/princelySid/yt-bot. This whole post will be talking about code on the list_videos_db branch so switch to that.

Once you’re on the right branch, you’ll need to run the command pipenv install --dev to install all the dependencies and then run the tests: pipenv run pytest. The output should look like this:

Let’s talk about the new dependencies we have added from the last post and their purposes:

sqlalchemy– interacting with databases.sqlalchemy-utils– custom data types and various utility functions for SQLAlchemy.python-dateutil– datetime utilitiesfeedparser– parsing atom and RSS feeds

We’ve also added 2 new environment variables to our .env file:

DB_PATH– path to the database’s location. Given that we’re using a sqlite database, it will be a file in one of the folders. I’d suggest the data folder we have our csv files in.DB_URI– string that we use to connect to the database, remember it should look like thissqlite:////{DB_PATH}

With all of this done, let’s look at our objective for step 3 again: For each channel get a list of recent videos and store that in a database. We’re going to break this into further sub steps:

- Move channel data into database.

- for each channel in database

- parse the feed of that channel

- get list of videos in the database that are from that channel

- for each video in the feed if that video is not in the database add that video to the database

Move channel data into the database

If you’ve done the steps correctly up to this point, all you have to run is pipenv run python data/add_channels_db.py. You should have an output that looks similar to this:

But let’s talk a little about what’s going on in add_channels_db.py

db_uri = getenv('DB_URI')

if not database_exists(db_uri):

create_database(db_uri)These lines get our database uri string, checks if the database exists; if it doesn’t, the database is created.

db = Database(db_uri)

db.create_tables(Base.metadata)The first line creates a Database object that I use for various database functions, primarily for session management. The second line creates the tables: channels and videos. This is because as we imported them they share the same Base object.

uncategorised = CSVService.read_csv_list_dict('data/ke_blog.csv')

music = CSVService.read_csv_list_dict('data/ke_music.csv')

for row in uncategorised:

row['category'] = 'uncategorised'

for row in music:

row['category'] = 'music'Theses line read in our csv files and in each case assign a new field called category, either music or uncategorised.

music_ids = {x['channel_id'] for x in music}

de_music = [channel for channel in uncategorised

if channel['channel_id'] not in music_ids]

music.extend(de_music)These lines combine our lists of channels together while making sure that there aren’t duplicates by dropping any channels marked as music that might be in labeled uncategorised.

with db.session() as session:

session.bulk_insert_mappings(Channel, music)

session.commit()This section added our combined list to the database.

Get videos for each channel

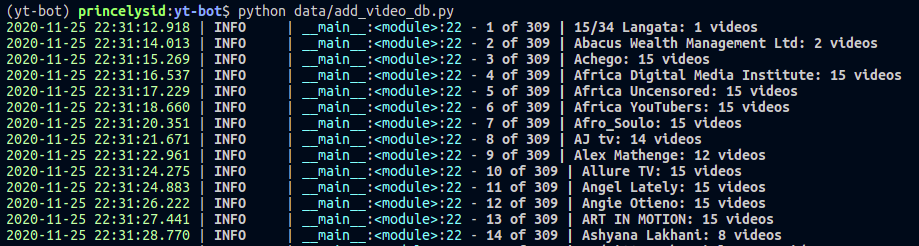

To do this, run pipenv run python data/add_video_db.py. The program can be found here. This will take some time because it takes about a second to parse and save each feed. Your terminal should look like this:

The main logic of the program is found in lines 13 to 37. Let’s step through what’s going on in the code.

channels = session.query(Channel)

total = channels.count()The first line pulls in the channel data that we saved in the last step and the second just gets the number of channels that we have. I use this count in the log to let us know how many channels we’re going through.

for idx, channel in enumerate(channels, start=1):All the code that follows happens within this loop. It loops through each channel in our channels and idx here is used track which channel we’re on in the overall count.

channel_id = channel.channel_id

video_ids = channel_video_ids(session, Video, channel_id)

rss_link = rss_feed(channel_id)

feed = format_feed(feedparser.parse(rss_link))

feed = filter_feed(feed, video_ids)There’s 5 lines and 4 functions here.

channel_video_idsgoes in to the database and gets any video_ids that we already have for that channel.- rss_feed in the first line takes the channel id and returns a link to the rss feed.

format_feedusing the link from last link and parses then formats the feed so that it can work with our database structure.filter_feedensure the feed that will only contain videos that are not in our database.

For this next section, instead of going into what the code is doing line by line I’ll just give a general description.

if feed:

channel_ids = set()

for entry in feed:

is_diff = entry['channel_id'] != channel_id

is_new = entry['channel_id'] not in channel_ids

is_in_db = session.query(Channel).filter(Channel.channel_id==entry['channel_id']).first()

if is_diff and is_new and not is_in_db:

channel_ids.add(entry['channel_id'])

_id = entry['channel_id']

logger.info(f'New Channel: {_id} | {channel.name}')

feed = [entry for entry in feed if entry['channel_id'] == channel_id]What this code does is to check for instances when there are new videos in the feed, then checks if the channel_id that is registered to the video is different to one that we’re supposed to be looking at(is_diff), and also checks whether we’ve not seen that different channel_id before in this feed(is_new) and it’s not in the db(not is_in_db).

If all that is true we log that we’ve not seen that channel_id before. This means that if we look at the log we’ll be able to see these channel_ids and check if they should be added to our database.

Finally we filter the feed for video that are only for the channel_id that we’re looking for so that we’re adding the videos to the right channel_ids.

if feed:

logger.info(f'{idx} of {total} | {channel.name}: {len(feed)} videos' )

video_db(session, Video, feed)These lines just mean that if the last section has any data, first log how far we are, which channel we’re on and how many new videos there are. The last line saves the data to the database.

Next part looking at our 8 point plan is step 4: take the last video link and download the video but I’m wondering if it might be a better idea to skip that step, and the one after it and got to step 6: tweet that video (title, link to Youtube).

There’s a couple of reasons for this. This part is very hard to test and get right exactly. And I’m travelling to my family’s country home in Siaya until New Year’s Day and the lack of unlimited broadband there will make it expensive to be downloading videos constantly.

I’ll have a discussion with theOkelo, editor of this blog and writer of highly technical electrical engineering posts here, on what next steps should be. Perhaps we’ll take a break from this series until next year.

Thank you for taking the time to read all this. If you have any questions feel free to reach out in the comments or on twitter. Peace!